If, when a musical instrument sounds, someone would perceive the finest movements of the air, he certainly would see nothing but a painting with an extraordinary variety of colors.

- Athanasius Kircher, Musurgia Universalis (1650)

Concept

Artists and scientists have long been inspired by the beautiful and complex motion of fluids. Yet the expressive potential of physical modeling is rarely exploited in audiovisual performance, because physical simulation is computationally expensive and difficult to control. Melange is a real-time audiovisual instrument that treats fluid flow as an expressive computational substance with intuitive gestural controls.

Melange served as my Master's project in the Media Arts and Technology Program at UC Santa Barbara.

Design

Melange was developed using TouchDesigner, C++, GLSL, and Python. TouchDesigner provides the backbone of the application; C++ was used for a custom plugin that retrieves depth map information from a RealSense SR300; GLSL was used to compute the fluid and spring simulations in texture space; and Python was used to glue the various parts of the system together.
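The shaders themselves are not shown here, so the following is only a sketch of what "computing a fluid in texture space" usually involves: each texel of a velocity or dye texture is updated by a full-screen pass that reads other texels. As an assumption, it uses semi-Lagrangian advection (the backtracing step from Jos Stam's "Stable Fluids"), written in NumPy so that each array operation stands in for one fragment-shader pass. The function names are hypothetical; this is not Melange's GLSL.

```python
import numpy as np


def bilinear_sample(field, x, y):
    """Sample a 2D grid at fractional texel coordinates (x, y), clamping to the
    edges. A CPU stand-in for a linearly filtered, clamp-to-edge texture read."""
    h, w = field.shape
    x = np.clip(x, 0.0, w - 1.0)
    y = np.clip(y, 0.0, h - 1.0)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx = x - x0
    fy = y - y0
    top = field[y0, x0] * (1 - fx) + field[y0, x1] * fx
    bottom = field[y1, x0] * (1 - fx) + field[y1, x1] * fx
    return top * (1 - fy) + bottom * fy


def advect(quantity, vx, vy, dt):
    """Semi-Lagrangian advection: every texel traces backwards along the
    velocity field and takes the (interpolated) value it finds there."""
    h, w = quantity.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    return bilinear_sample(quantity, xs - dt * vx, ys - dt * vy)
```

Because every output texel only reads from the previous state, the same loop-free formulation maps directly onto a ping-ponged pair of textures on the GPU, which is what makes texture-space simulation cheap enough for real-time use.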

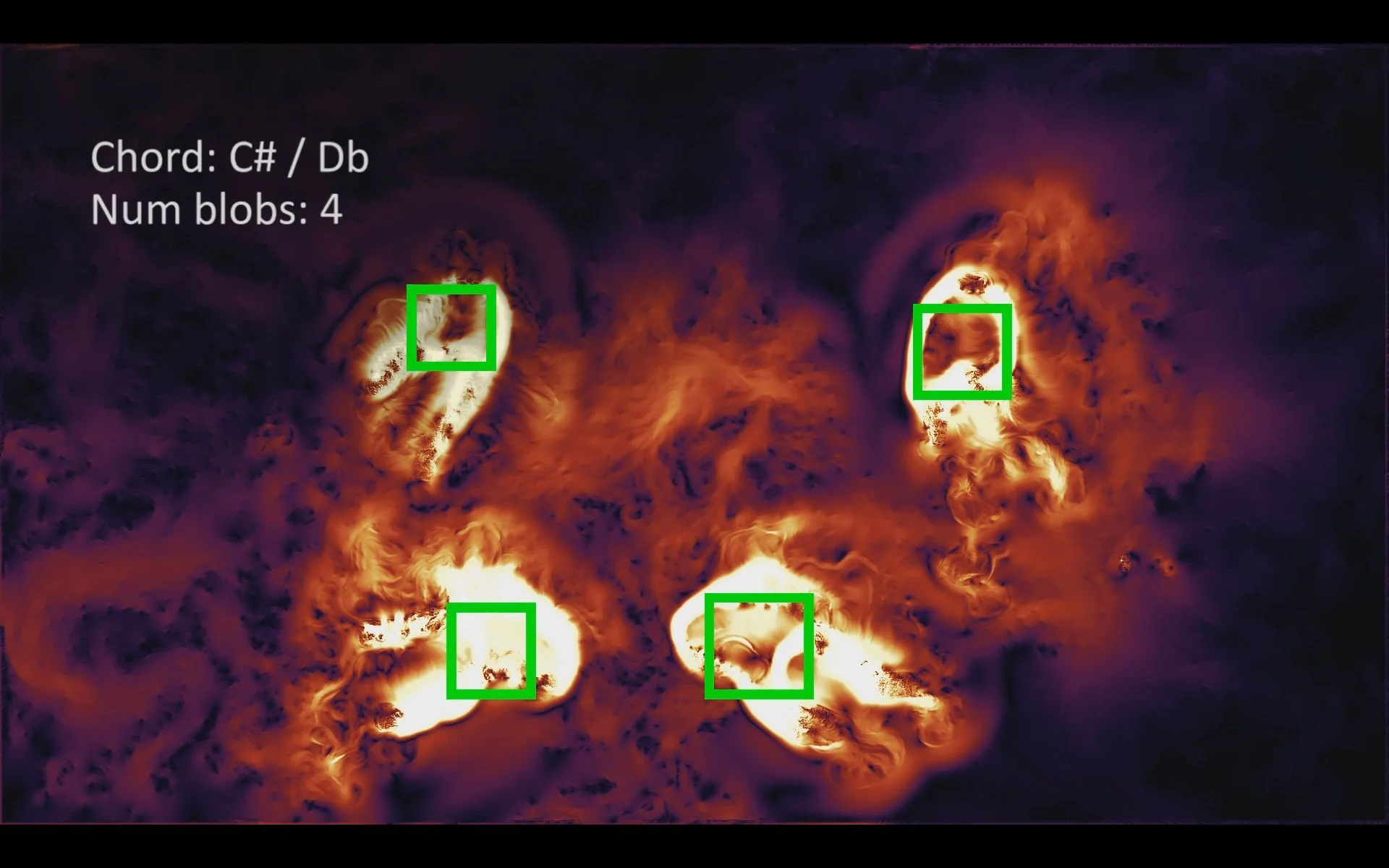

The system uses a depth-sensing camera aimed at a table for tactile velocity input, and multiple one-dimensional spring-mass systems for sonification. Scanned synthesis was implemented on concentric rings of springs displaced by the velocity field: the deformed shape of each ring becomes a waveform that is scanned at audio rate. With multiple spring rings and blob detection, the instrument can produce a variety of chords.
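A minimal sketch of scanned synthesis on one ring, assuming a simple model: each mass is tied to its two neighbors and to its rest position by springs, loses energy through damping, and is pushed by an external force that stands in for the fluid velocity sampled under it. The class, the `scan` function, and all constants are hypothetical; Melange computes its springs in GLSL, not like this. The ring is updated at a slow control rate while its shape is read out as a single-cycle wavetable at audio rate.

```python
import numpy as np


class SpringRing:
    """A closed one-dimensional chain of masses with neighbor springs,
    a centering spring pulling each mass back to rest, and damping."""

    def __init__(self, n=128, k_neighbor=0.2, k_center=0.01, damping=0.02):
        self.pos = np.zeros(n)  # displacement of each mass from rest
        self.vel = np.zeros(n)
        self.k_n = k_neighbor
        self.k_c = k_center
        self.damping = damping

    def step(self, external_force):
        """One semi-implicit Euler update. external_force is a hypothetical
        stand-in for the fluid velocity sampled under each mass."""
        left = np.roll(self.pos, 1)   # the ring is closed, so indices wrap
        right = np.roll(self.pos, -1)
        force = (self.k_n * (left + right - 2 * self.pos)  # neighbor springs
                 - self.k_c * self.pos                     # centering spring
                 - self.damping * self.vel                 # energy loss
                 + external_force)
        self.vel += force
        self.pos += self.vel


def scan(ring_shape, freq, sample_rate, num_samples, phase=0.0):
    """Read the ring's current shape as one wavetable cycle at `freq` Hz,
    interpolating linearly between masses. Returns (samples, next_phase)."""
    n = len(ring_shape)
    phases = (phase + freq * np.arange(num_samples) / sample_rate) % 1.0
    idx = phases * n
    i0 = np.floor(idx).astype(int) % n
    i1 = (i0 + 1) % n  # wrap from the last mass back to the first
    frac = idx - np.floor(idx)
    out = ring_shape[i0] * (1 - frac) + ring_shape[i1] * frac
    next_phase = (phase + freq * num_samples / sample_rate) % 1.0
    return out, next_phase
```

Under the same assumptions, a chord would come from several rings (for example one per detected blob) scanned at different frequencies, such as 220, 277.18, and 329.63 Hz for an A major triad, with their outputs summed. The pitch is set by the scan rate, while the timbre follows whatever shape the fluid has pushed each ring into.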

The interaction design takes musical instruments as the model of an ideal performance interface: the performer interacts with a physical object, coordinated hand and finger motions are crucial to the audio output, and the sonic response is instantaneous. The surface of the table becomes a virtual interaction field onto which audiovisual material can be deposited. Anything that crosses the 1 cm threshold (roughly the width of a fingertip) is added to the simulation.
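A rough sketch of how such a threshold might turn a depth map into tactile input. It assumes depth values already converted to millimeters, a baseline depth image of the empty table captured beforehand, and that the threshold is a band of height above the table; the text does not say exactly how the threshold is applied. The noise floor, function names, and the centroid-based velocity are hypothetical simplifications, not Melange's blob detection.

```python
import numpy as np

THRESHOLD_MM = 10.0  # the 1 cm band above the table, roughly a fingertip's width
NOISE_MM = 2.0       # hypothetical: ignore sensor jitter right at the surface


def contact_mask(depth_mm, table_mm, threshold_mm=THRESHOLD_MM, noise_mm=NOISE_MM):
    """Pixels whose height above the empty-table baseline lies inside the
    interaction band. Both inputs are 2D distances from a downward-facing camera."""
    height = table_mm - depth_mm  # closer to the camera means higher above the table
    valid = depth_mm > 0          # a depth of 0 is treated as a missing reading
    return valid & (height > noise_mm) & (height <= threshold_mm)


def centroid(mask):
    """Mean (x, y) position of the contact pixels, or None if there are none."""
    ys, xs = np.nonzero(mask)
    if len(xs) == 0:
        return None
    return np.array([xs.mean(), ys.mean()])


def contact_velocity(prev_mask, mask, dt):
    """Finite-difference velocity (pixels per second) of the contact region,
    a simple stand-in for the velocity injected into the fluid."""
    a, b = centroid(prev_mask), centroid(mask)
    if a is None or b is None:
        return np.zeros(2)
    return (b - a) / dt
```

A single centroid only tracks one contact; separating the mask into connected blobs first would give one velocity per finger or hand.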